I have now taken 5 DNA microarray (chip) tests with Family Tree DNA, 23andMe, Ancestry DNA, MyHeritage DNA and Living DNA. I have also taken two Whole Genome Sequencing (WGS) tests with Dante Labs, one short-reads and one long-reads.

I analyzed the accuracy of these tests by comparing individual SNP values in my article Determining the Accuracy of DNA Tests. The chip tests don’t all test the same SNPs, but there’s enough commonality that they can be compared, and an error rate can be estimated. For my chip tests, that error rate turned out to be less than 0.5%.

The WGS test results don’t give you individual positions. They give you a set of reads, which are segments that are somewhere along the genome. Short read WGS tests give you segments that may be 100 to 150 bases long. Long read WGS tests can give segments that average 10000 bases long with the longest being into the megabases (millions of bases). But you don’t know where those segments are located on the genome.

To determine where the WGS reads are on the genome, there are two methods available:

1. Alignment: Each of the reads are matched to where they are best located in the human reference genome. The WGS testing companies often do the alignment for you and give your results to you in a BAM (Binary sequence Alignment Map) file. The alignment cannot be perfect because

- You have variants that are different from the human reference genome as well as INDELs (insertions and deletions),

- The WGS data has errors in the reads, sometimes changing values, adding extra values or deleting values.

- The algorithms used for alignment are not perfect and sometimes make assumptions.

Comparing my BAM file results from my short read WGS test using the BWA alignment tool, the SNPs I could compare were even more accurate than my chip tests with an error rate of less than 0.1%. That sounds very good, but still 1 in 1300 results were wrong, meaning in 700,000 SNPs, there could be 500 errors.

The WGS_Extract tool that I was using to extract the SNP values from the BAM file didn’t handle INDELs properly so I couldn’t check the accuracy of those. Despite its high accuracy for individual SNPs, short read WGS tests are not as good at identifying INDELs correctly, e.g the YouTube video (see below) states 85% to 95% accuracy which is a high 5% to 15% error rate.

For my long reads WGS test, I had two alignments done, one using a program called BWA and one using minimap2 which was supposed to be better for long reads. I was very disappointed to find a quite high error rate on the SNPs I could compare, which was 7.7% and 6.6% for the two programs.

Thus, alignment techniques and the algorithms that implement them are not bad, but they are far from perfect. They match your reads to a reference genome and have to assume that the best fit is where your read goes.

2. De Novo Assembly, or just Assembly: This is where you only take the WGS reads themselves, and match them up with each other, piecing them together like a big picture puzzle.

Actually, it’s tougher than a picture puzzle. The best analogy I’ve seen is it’s like taking 100 copies of today’s issue of the New York Times newspaper, and shredding them into small random pieces where you can only see a few words from a few lines. Just to make it a bit more difficult, half the papers are the morning edition, and half are the afternoon edition, where 90% of the articles are the same, but the other 10% have a different article in the same location in the paper. On top of that, somehow one copy of yesterday’s paper accidentally got in the mix. Now you have to reassemble one complete newspaper from all these pieces. And as a bonus, try to create both the morning edition and the afternoon edition.

You likely will mix up some morning edition articles with some afternoon edition articles, unless you get some pretty big pieces that include 2 of the same edition’s articles in that piece. (Think about this!)

So the two editions are like the your paternal and maternal chromosomes, and one copy of the previous day’s paper are like a 1% error rate that your reassembling has to deal with. Add in shredded versions of six different issues of the newspaper for a 6% error rate.

A genome assembler matches one read to another and tries to put them together. The longest stretches of continuous values that it can assemble are called contigs. Ideally, we would want to assemble 24 contigs for the 23 autosomal chromosomes plus the mitochondrial (mtDNA) chromosome. A male will have a 25th, that being his Y chromosome.

When assemblers can’t connect a full chromosome together (which none can do yet for humans), you can run another program to use a technique called scaffolding to connect the contigs together. That is done by mapping the contigs to the human reference genome and using the human reference genome as the scaffolds (or connections).

Assembly with short read WGS has not been able to give good results. Similar to alignment, the reads are too short to span repeats, and thus give way too many contigs. Long reads are normally used for assembly, and despite their high error rate for individual base pairs, sophisticated error correction techniques and minimum distance algorithms have been developed to do something reasonable. However, chromosome-scale configs are still not there yet, and many smart researchers are working to solve this, eg. this article from Nov 2019 describing a method using a connection graph.

I attempted an assembly of my long reads WGS about 6 months ago using a program called miniasm. I let it run on my computer for 4 days but I had to stop it. So I waited until before a 2 week vacation and started it, but while it was running my computer crashed.

I realized that this is too long to occupy my computer to do an assembly that likely will not give good results. And I was not happy running it in Unix on my Windows machine. I was interested in a Windows solution.

Algorithms for Genome Assembly

I’ve always been a programmer who likes the challenge of developing an algorithm to solve a problem. I have a BSc Honours in Statistics and an MSc in Computer Science, and my specialty and interest was in probabilty and optimization.

I have developed and/or implemented many computer algorithms, including detection of loops in family trees for Behold, matching algorithms in Double Match Triangulator, simulation of sports and stock market performance (winning me over $25,000 in various newspaper contests) and from my university days: my at-the-time world class chess program: Brute Force.

Currently for the next version of Behold, I am implementing a DNA probability of match and conditional upon matching expected match length for autosomal, X, Y and mtDNA between selected people and everyone else in your family tree. In doing so, I have to also determine all ways the selected people are related and statistically combine the results. All this data will be available if the user wants along with all the ways these people are related. It should be great.

But back to genome assembly. The problem with assembly algorithms today is that they have to use long reads, and long reads have very high error rates. So they must attempt to do some sort of approximate matching that allows for errors and then uses the consensus approach, i.e. that takes the values that most reads aligning to the same position agree on. It is not clean. It is not simple. There is a lot of error correction and many assumptions must be made.

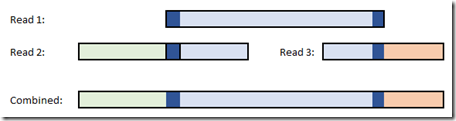

Ahh, but wouldn’t it be simple if we could just take one read, and match the start to another read and the end to a third read. If you have enough coverage, and if the reads are accurate enough, then this would work fine.

In fact this is how they put together the first human genomes, painstakingly connecting the segments that they had one by one.

But alas, the long reads WGS tests are not accurate enough to do this. So something else had to be done.

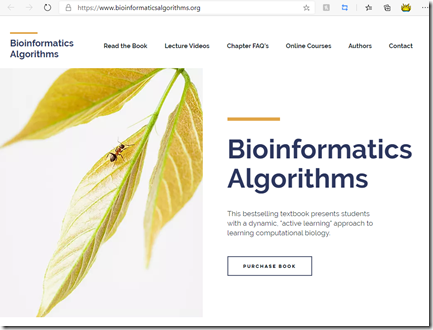

A couple of months ago, I discovered a wonderful online book called Bioinformatics Algorithms, designed for teaching. The entire text of the book is available online. You can also purchase the book for yourself or for your class.

Chapter 3 is :How Do We Assemble Genomes? That is where I got the exploding newspaper analogy which I expanded on above. The chapter is amazing, turning the problem into graph theory, bringing in the famous Königsberg Bridge Problem, solved by mathematician Leonhard Euler, and explaining that a de Bruijn graph is the best solution for error prone reads.

This looked like quite a task to implement. There are many assembly algorithms already developed using this technique, and I don’t think there’s anything I can do here that those working on this haven’t already done.

Accurate Long Reads WGS!!!

Also a couple of months ago, another innovation caught my attention. The company PacBio developed a DNA test they call PacBio HiFi SMRT (Single Molecule, Real-Time) WGS, which are both long reads (up to 25 kb) and are highly accurate (about 99.8%)

Whoa! The world has just changed.

No longer was extensive error correction required. The video above talks about the HiCanu assemblers and how they were modified to very much take advantage of this improved test. Not only that, but the practise of using short reads to “polish” the data is no longer required, and is actually discouraged with HiFi reads as the polishing actually can introduce errors.

What does this mean? Well, to me this indicates that the original ideas of simply connecting ends might just work again. I have not seen any write-up about this being attempted anywhere yet. The assembly algorithm designers have been using advanced techniques like de Bruijn graphs for so long, they might never have thought to take a step back and think that a simpler solution may now work.

So I thought I’d take that step back and see if I can develop that simpler solution.

A Simple Single-Pass Assembler for Windows

For 25 years I’ve developed software using the programming language Delphi on Windows. Most bioinformatics tools are written in Python for Unix. I’m too much of an old horse who is too busy to learn new tricks. So Delphi it will be for me.

The algorithm with perfect reads seemed fairly simple to me. Make the first read a contig. Check the next read. Does the start or end of the read match any anywhere within the contig? If so, extend the contig. If not, make the read a contig. Continue sequentially just one time through the reads and after the last read, you should be done!!!

Once I got going, I only found it slightly more complicated than that. You also had to check if the start and end of the contigs matched anywhere within the read, and also if the read contained the contig or the contig contained the read. I set a minimum overlap length thinking that I’d want to ensure that the read and the contig matched at least that much. Then any repeats smaller than that overlap would be bridged.

First I needed some sample data. In the Bioinformatics Algorithms book Chapter 9, the Ch 9 Epilogue on Mismatch-Tolerant Read Mapping gives a challenge problem includes a 798 KB partial dataset of the bacterial genome Mycoplasma pneumoniae with 816,396 values in it, all either A, C, G or T.

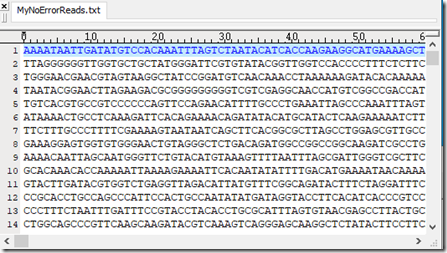

This is what that dataset looks like in my text viewer. It’s just one long line 816,396 values in it:

The challenge problem also included a file of 40,000 short reads from that dataset, all of length 100. That gives 4 million data points for a coverage of 4.9x over the 816,396 in the genome.

However, not a single one of the 40,000 reads were in the genome. The challenge was to find the number of reads that had at most 1 mismatch.

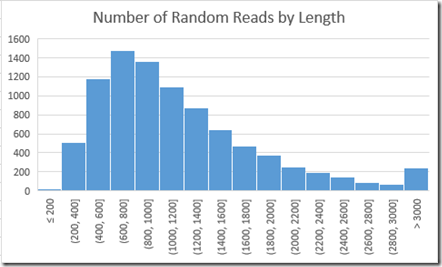

Since I wanted a perfect dataset of reads to start with, I saw that I needed to create my own. Also, I wanted them to be like long reads, all with differing lengths. So after a bit of trial and error, I ended up using a base-10 lognormal distribution, with a mean of 3 and standard deviation of 0.25 to generate 9000 random read lengths. Longest read length was 11,599. Shortest was 124. Mean was 1174.

So those 9000 reads average 1174 bases and total 10.6 million data points, giving 13.0x coverage of the genome, which is quite a bit more than the 4.9x coverage in their example short reads. This is good, because there’s more likelihood I’ll have enough reads to cover the entire genome without gaps.

I then generated random start positions for those 9000 reads, and extracted the actual genome values at that position for that read length, and put those into my own reads file. So now I had a set of reads with no errors to develop with.

This is what my set of reads look like in my text viewer. There are 9000 lines, each of varying length:

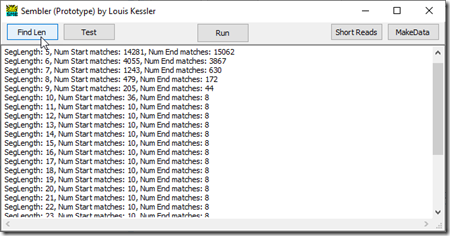

To do the alignment, I didn’t know how much of the start and the end of each read was needed for finding a match in another read. So I wrote a small routine to take the first n positions at the start and end of the first read, and find out how many other reads they are contained in:

I started at length 5. The first 5 values of the first read matched somewhere in 14,281 other reads. Obviously length 5 is too small. Increasing to the first 11 values, we see the start of the first read only matches 10 other reads and the end only matches 8. This does not decrease any more as we increase the segment size indicating that we likely found all the occurrences of that sequence in all the reads. With 13.0x coverage, you would expect on average 13 matches over any segment. I have 1 + 10 = 11 occurrences of the first part of the first read, and 1 + 8 = 9 occurrences of the last part of the first read. That’s a very possible result with 13.0x coverage.

So for this genome and the sample data I have, I’ll set my segment length to 12 and select the first 12 values and last 12 values of each read for my comparisons.

The reason why such a small 12 value segment can be used is because there are 4 possible values, A, C, G and T at each position. And 4 to the power of 12 is 16,777,216 meaning there’s that many ways to make a 12 letter string out of those 4 values. Our genome is only 816,396 bases long, so there is very little chance there are very many segments of length 12 that are randomly included more than once. For a human genome of 3 billion reads, a slightly longer segment to compare with will be required, maybe length 17 or 18 will do it.

Running My Assembler: Sembler

After about 4 days of development, testing, debugging and enhancement, I got my simple assembler running. I call it: Sembler. This version ended up with about 200 lines of code, but half of that is for reporting progress.

So this is its algorithm. Sembler checks the first and last 12 positions of every read against each contig created so far. It also checks the first and last 12 positions of the contig against the read. And it checks if the read is completely in the contig and if the contig is completely in the read. Based on the situation, it will then either expand the contig, combine two contigs, or create a new contig.

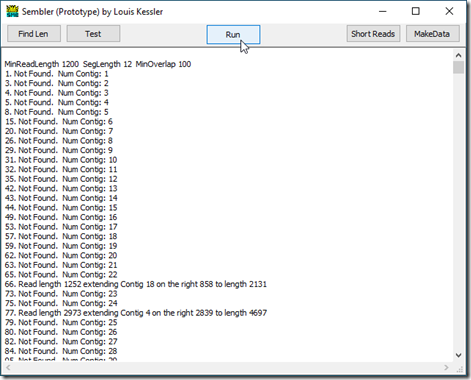

Sembler reports its progress as it goes. Here is how it starts on my test data:

The first line shows the settings used. The next lines show the reads used. Since the minimum read length for this run was 1200, reads 2, 6, 7, 9, … were not used because they were too short.

Up to read 66 no overlaps were found, so a contig was created from each read. At read 66, and again at read 77, the first 12 values of the read matched somewhere in one of the contigs. The rest of the contig matched the read after those 12 values, but the read was longer and had more values available that Sembler then used to extend that contig to the right.

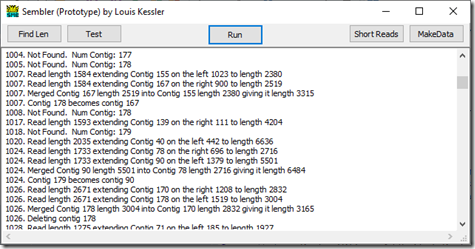

If we go down further to reads starting at 1004 we see:

We have now built up 177 contigs and they grow to a maximum of 179 contigs by read 1018. At this point, the contigs cover much of the genome and it is getting tougher for new reads not to be overlapping with at least one of the contigs.

The start of read 1024 matches somewhere in contig 78 and the end of read 1024 matches somewhere in contig 90. So this read has connected the two contigs. Contig 90 is merged into contig 78, and the last contig 179 is moved into contig 90’s spot just so that there aren’t any empty contigs to deal with.

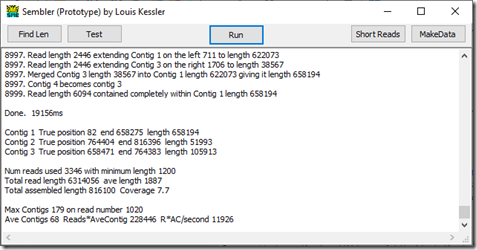

Below is the end of the output from running reads with length >= 1200:

We get down to read 8997 which ends up merging contig 3 into contig 1, and contig 4 becomes contig 3. So we are left with just 3 contigs.

The run took 19.156 seconds.

Normally, you don’t know the genome. This procedure is designed to create the genome for you. But since I am still developing to get this to work, I had Sembler look up the final contigs in the genome to ensure it has done this correctly. The three contigs it came up with were:

Contig 1 from position 82 to 658275

Contig 3 from position 658471 to 764383, and

Contig 2 from position 764404 to 816396.

So positions 1 to 81 were not identified, because there were no reads with length at least 1200 that started before position 82. And there was a small gap of length 195 between 658275 and 658471 which no reads covered and another gap of length 20 between 764383 and 764404 that no reads covered.

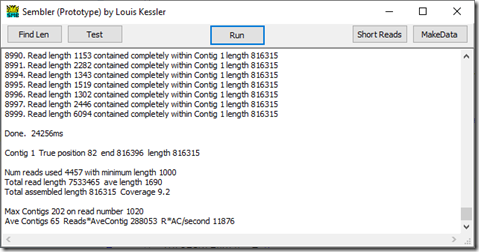

Despite the 7.7x coverage, there were still a couple of small gaps. We need a few more reads to fill in those gaps. One way of doing so is to lower the minimum read length. So I lowered the minimum read length to 1000 and get this:

Success! We now have a single contig from position 82 to 816396.

Optimizing the Assembler

I have so far done nothing to optimize Sembler’s code. The program compares character strings. It uses a Pos function to locate one string within another. There are many ways to improve this to make it run faster, but getting the algorithm working correctly was the first necessity. I have a lot of experience at optimizing code, so if I carry this program further, I will be sure to do so.

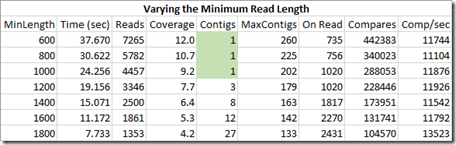

But just as important as optimizing the code is optimizing the algorithm. Starting parameters are very important. Let’s look at what tweaks can be made.

As we increase the minimum length of the reads we include, we reduce the number of reads we are using. This reduces coverage, reduces the number of compares we do and takes less time. The maximum contigs we have to deal with decreases and that maximum happens later during the reads.

But if our value for the minimum length is too high, we don’t get enough coverage to fill in all the gaps and we end up with more than one contig. The most important thing here is to try to end up with just one contig.

Based on the one contig requirement, our optimum for this set of reads for this genome is to select a minimum length of 1000.

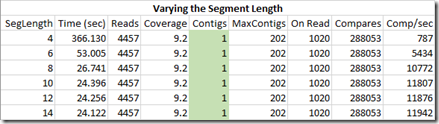

Now let’s set the minimum length to 1000 and vary the segment length:

Varying the segment length we are comparing doesn’t change the result. The segment length is looking for a potential contig it matches to. If the length is too short, then the start or end of the read will match to random locations in each contig. They will be rejected when the rest of the read is compared, which is why the solution doesn’t change. But all these extra checks can dramatically increase the execution time if the segment length is too small.

These are perfect reads I’m working with right now that have no errors. Once errors are considered, we’ll want to keep the seglength as small as possible to minimize the chance that the start segment or end segment contains an error. If it does, then that read will all be rejected when the rest of the read is compared, effectively eliminating the use of that read.

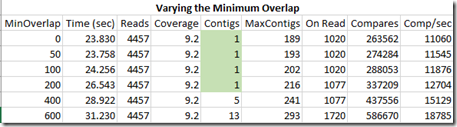

Now let’s put the segment length back to 12 and vary the minimum overlap which by default I had set to 100:

These results surprise me somewhat. I was expecting a minimum overlap of 50 and especially of 0 to fail and give lots of contigs. I’ll have to think about this a bit. Maybe it is because I’m using perfect reads with no errors in them.

None-the-less, this shows that if the minimum overlap is too high, then some of our matches will be excluded causing some gaps. We don’t want the minimum overlap too low, or we may match two contigs that are side by side but don’t have enough “proof” to connect them. That isn’t a problem in this “perfect reads” case, but once errors are introduced, some overlap will likely be wanted as a double check.

Next Steps

This procedure works.

Is it fast enough for a full WGS dataset? We’re talking about tens to hundreds of millions of reads rather than just 9000. And we’re talking about a genome that is 3 billion positions rather than just 800,000. So instead of 200 max contigs, we’ll likely have 200,000 max contigs. So it could theoretically take a million times longer to solve than the little problem I have here.

If with optimization I can get the comparisons to be 20 times faster, then we’re talking a million seconds which is 278 hours, i.e. 23 days. That’s a little bit longer than I was hoping. But this is just a back of the envelope calculation. I’m not multithreading, and there are faster machines this can run on. If a procedure can be made available that will do a full de novo assembly of a human genome in less than 24 hours, that would be an achievement.

I have so far only tested with perfect data. It wouldn’t be too hard to test the addition of imperfections. I could change every 1000th value in my sample reads to something else and use that as a 0.1% error rate like WGS short reads. I could change every 500th for a 0.2% error rate like PacBio HiFi reads. And I can change every 20th for a 5% error rate like WGS long reads. I already have some ideas to change my exact comparison to an approximate comparison that will allow for a specific error rate. The tricky part will be getting it to be fast.

It might be worthwhile running my program as it is against my WGS short reads. I showed above that the minimum overlap may not need to be as high as I originally thought, so maybe the WGS short reads will be able to assemble somewhat. There likely will be many regions that repeats are longer than the short reads are, and this procedure will not be able to span them. The elephant in the room is can I process my entire WGS short reads file in a reasonable amount of time (i.e. 1 day, not 23 days)? And how many contigs will I get? If it will be 200, that will be pretty good, since that will only be an average of 10 per chromosome. But if there’s 2000 contigs, then that’s not quite as good.

I should try to get a set of PacBio HiFi human reads.That is what this procedure is geared towards. PacBio HiFi are the reads that I think with enough coverage, might just result in 25 contigs, one for each chromosome plus Y plus mt. Then it wouldn’t be too hard to add a phasing step to that to separate out those contigs into 46 phased chromosomes + mt for women, or 44 phased chromosomes + X + Y + mt for men. I think the PacBio HiFi reads have a chance of being able to do this.

Finally, I would love to get a set of PacBio HiFi reads for myself. I don’t have my parents with me any more and they never tested, and I’d love to phase my full genome to them. Also, then I can do some analysis and see how well (or poorly) the WGS alignment techniques I did compared to the (hopefully) accurate genome that I’ll have assembled for myself.

Maybe this won’t all happen this year. But I’m sure it will eventually, whether based on my Sembler algorithm, or on some other creation by some of the many hyper-smart bioinformation programmers that are out there.

If PacBio HiFi reads prove to be the revolution in genetic testing that they are promising to be, then for sure the whole world of WGS testing will change in the next few years.

Joined: Thu, 4 Apr 2019

3 blog comments, 0 forum posts

Posted: Sun, 17 Jan 2021

I really enjoyed your explanation of the challenges for assembling a genome. It’s impressive how you learned the complexity of genome assembly and how you were able to write and improve your own program to accomplish this.