Using AI Bots for Programming - Sun, 16 Mar 2025

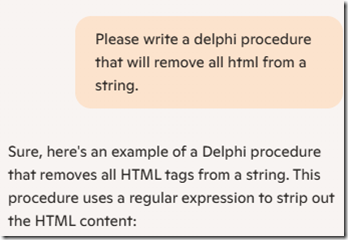

I’m working hard on Version 2 of Behold, and I needed to write some code to strip out HTML from a string. This would be so Behold could display the text embedded in the HTML correctly without formatting.

Since I’m a Windows and Office 365 aficionado, I get Microsoft’s AI bot, Copilot, built into my Edge browser and my Office products, so that’s the one most convenient for me to use. I have had good luck in the past asking Copilot programming questions to help me with small tasks in my programming language, Delphi.

But this time, this was a task I really didn’t want to do myself. I saw the solution as scanning through the string character by character and parsing out what was HTML to remove it. Not too difficult, but a bit of a pain to code. So I thought I’d let Copilot try.
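For comparison, the hand-written approach described above might look something like the sketch below. It is only an illustration: the function name `StripTagsByScanning` is made up for this example, and it assumes that every `<` starts a tag and the next `>` ends it, which ignores comments, scripts and HTML entities.

```delphi
program StripTagsByScan;

{$APPTYPE CONSOLE}

uses
  System.SysUtils;

// Hypothetical helper: copies every character that is not inside <...>.
function StripTagsByScanning(const Input: string): string;
var
  Builder: TStringBuilder;
  Ch: Char;
  InsideTag: Boolean;
begin
  Builder := TStringBuilder.Create;
  try
    InsideTag := False;
    for Ch in Input do
    begin
      if Ch = '<' then
        InsideTag := True            // a tag starts here, stop copying
      else if (Ch = '>') and InsideTag then
        InsideTag := False           // the tag has ended, resume copying
      else if not InsideTag then
        Builder.Append(Ch);          // ordinary text, keep it
    end;
    Result := Builder.ToString;
  finally
    Builder.Free;
  end;
end;

begin
  // Prints: Hello, World!
  WriteLn(StripTagsByScanning('<html><body>Hello, <b>World</b>!</body></html>'));
end.
```

Even this small version needs a flag and a loop, which is the "bit of a pain" part.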

Query Number 1

    uses
      System.RegularExpressions;

    function RemoveHTML(const Input: string): string;
    var
      RegEx: TRegEx;
    begin
      // Create a regular expression to match HTML tags
      RegEx := TRegEx.Create('<[^>]+>');
      // Replace all HTML tags with an empty string
      Result := RegEx.Replace(Input, '');
    end;

    // Example usage:
    var
      Original, Cleaned: string;
    begin
      Original := '<html><body>Hello, <b>World</b>!</body></html>';
      Cleaned := RemoveHTML(Original);
      WriteLn(Cleaned); // Output will be "Hello, World!"
    end.

Well, that was a surprise to me: a very simple two-line function. It uses a pattern-matching notation called a “regular expression” to find the HTML bits. In all my programming days, I’ve never come to understand them well. I find that once you write one of those regular expressions, such as the “<[^>]+>” that Copilot created, it is almost impossible to reverse engineer what it does and how it works.

I was a great fan of the APL programming language, and it had the same feature of being impossible to figure out after the fact.

Nonetheless, Copilot gave me some code that I could use even if I didn’t fully understand how it worked.
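For what it’s worth, that particular pattern can be read left to right in three pieces. The small sketch below spells them out in comments and lists what the pattern matches in a sample string. The constant name `TagPattern` and the sample text are just assumptions for this illustration.

```delphi
program RegexBreakdown;

{$APPTYPE CONSOLE}

uses
  System.RegularExpressions;

const
  // <       a literal opening angle bracket
  // [^>]+   one or more characters that are NOT a closing angle bracket
  // >       a literal closing angle bracket
  TagPattern = '<[^>]+>';

var
  Match: TMatch;
begin
  // Show each piece of the sample string that the pattern treats as a tag.
  for Match in TRegEx.Matches('Hello, <b>World</b>!', TagPattern) do
    WriteLn(Match.Value);
end.
```

Run against that sample, it should list the opening and closing bold tags, which are exactly the pieces `RemoveHTML` throws away.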

Query Number 2

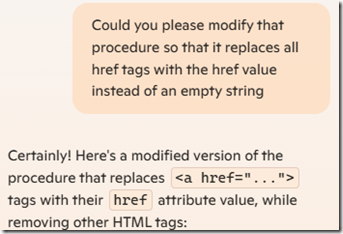

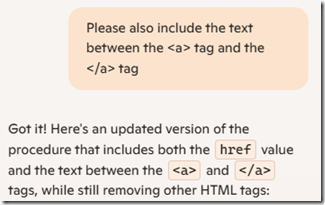

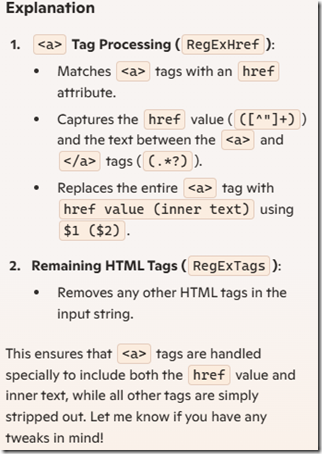

I wanted a slight modification to that. HTML can include URLs in its HREF values, and that was information I didn’t want thrown out, so I then asked Copilot:

    uses
      System.RegularExpressions;

    function ReplaceHrefTags(const Input: string): string;
    var
      RegExHref, RegExTags: TRegEx;
    begin
      // Regular expression to match href tags and extract href value
      RegExHref := TRegEx.Create('<a\s+[^>]*href="([^"]+)"[^>]*>');
      // Replace href tags with just their href values
      Result := RegExHref.Replace(Input, '$1');
      // Regular expression to match all other HTML tags
      RegExTags := TRegEx.Create('<[^>]+>');
      // Remove other HTML tags
      Result := RegExTags.Replace(Result, '');
    end;

    // Example usage:
    var
      Original, Processed: string;
    begin
      Original := '<html><body>Visit <a href="http://example.com">this link</a> for more information.</body></html>';
      Processed := ReplaceHrefTags(Original);
      WriteLn(Processed); // Output will be: "Visit http://example.com for more information."
    end.

Excellent! Just one more thing.

Query Number 3

    uses
      System.RegularExpressions;

    function ProcessHTML(const Input: string): string;
    var
      RegExHref, RegExTags: TRegEx;
    begin
      // Regular expression to match <a> tags with href attributes and capture both href value and inner text
      RegExHref := TRegEx.Create('<a\s+[^>]*href="([^"]+)"[^>]*>(.*?)</a>');
      // Replace <a> tags with "href value (inner text)"
      Result := RegExHref.Replace(Input, '$1 ($2)');
      // Regular expression to match all other HTML tags
      RegExTags := TRegEx.Create('<[^>]+>');
      // Remove remaining HTML tags
      Result := RegExTags.Replace(Result, '');
    end;

    // Example usage:
    var
      Original, Processed: string;
    begin
      Original := '<html><body>Visit <a href="http://example.com">this link</a> for more information.</body></html>';
      Processed := ProcessHTML(Original);
      WriteLn(Processed); // Output will be: "Visit http://example.com (this link) for more information."
    end.

I am rather impressed. I ended up with the code that I wanted after only 3 queries to Copilot. And it provided me with the code, an example, and an explanation of how it worked.

I know it would have taken me considerably more than 5 minutes to write that code myself without the use of regular expressions.

Thank you Copilot! Maybe you can’t completely translate my Russian documents for me yet, but it looks like you could be very useful to me for my programming.

Just One More Thing

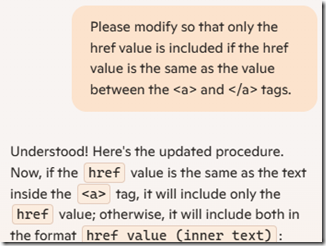

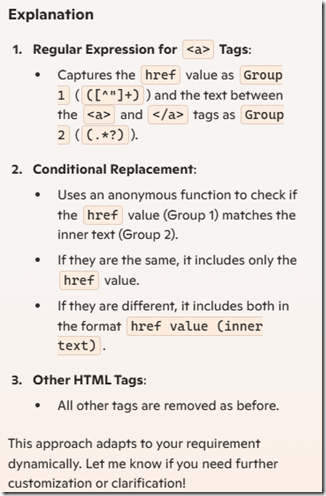

While testing this code on some of my data, I found one more revision was needed:

    uses
      System.RegularExpressions;

    function ProcessHTML(const Input: string): string;
    var
      RegExHref, RegExTags: TRegEx;
    begin
      // Regular expression to match <a> tags with href attributes and capture both href value and inner text
      RegExHref := TRegEx.Create('<a\s+[^>]*href="([^"]+)"[^>]*>(.*?)</a>');
      // Replace <a> tags based on the condition
      Result := RegExHref.Replace(Input,
        function(const Match: TMatch): string
        begin
          if Match.Groups[1].Value = Match.Groups[2].Value then
            Result := Match.Groups[1].Value // Include only href value
          else
            Result := Match.Groups[1].Value + ' (' + Match.Groups[2].Value + ')'; // Include href value and text
        end
      );
      // Regular expression to match and remove all other HTML tags
      RegExTags := TRegEx.Create('<[^>]+>');
      Result := RegExTags.Replace(Result, '');
    end;

    // Example usage:
    var
      Original, Processed: string;
    begin
      Original := '<html><body>Check out <a href="http://example.com">http://example.com</a> and <a href="http://another.com">this link</a>.</body></html>';
      Processed := ProcessHTML(Original);
      WriteLn(Processed); // Output will be: "Check out http://example.com and http://another.com (this link)."
    end.

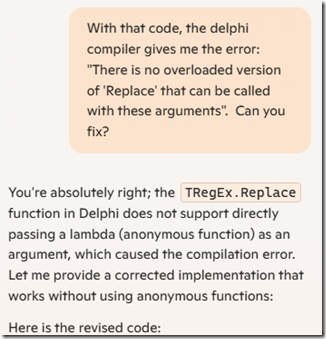

But this time there was a problem. The code would not compile.

Would Copilot be able to fix it?

    uses
      System.SysUtils, // StringReplace and rfReplaceAll
      System.RegularExpressions;

    function ReplaceHrefTags(const Input: string): string;
    var
      RegExHref, RegExTags: TRegEx;
      Matches: TMatchCollection;
      Match: TMatch;
      Processed: string;
    begin
      Processed := Input;
      // Regular expression to match <a> tags with href attributes and capture both href value and inner text
      RegExHref := TRegEx.Create('<a\s+[^>]*href="([^"]+)"[^>]*>(.*?)</a>');
      Matches := RegExHref.Matches(Processed);
      // Process each <a> tag match
      for Match in Matches do
      begin
        if Match.Groups[1].Value = Match.Groups[2].Value then
          Processed := StringReplace(Processed, Match.Value, Match.Groups[1].Value, [rfReplaceAll])
        else
          Processed := StringReplace(Processed, Match.Value, Match.Groups[1].Value + ' (' + Match.Groups[2].Value + ')', [rfReplaceAll]);
      end;
      // Regular expression to match all other HTML tags
      RegExTags := TRegEx.Create('<[^>]+>');
      // Remove all remaining HTML tags
      Result := RegExTags.Replace(Processed, '');
    end;

    // Example usage:
    var
      Original, Processed: string;
    begin
      Original := '<html><body>Check out <a href="http://example.com">http://example.com</a> and <a href="http://another.com">this link</a>.</body></html>';
      Processed := ReplaceHrefTags(Original);
      WriteLn(Processed); // Output will be: "Check out http://example.com and http://another.com (this link)."
    end.

And that worked!

Very well done Copilot.
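A likely reason the version with the inline function would not compile, assuming the error pointed at that function: Delphi declares `TMatchEvaluator` as `function(const Match: TMatch): string of object`, which is a method pointer, so an anonymous function is not accepted there. The sketch below shows one way the evaluator idea could still be used, by putting the logic in a method. The names `TLinkFormatter`, `FormatLink` and `ProcessHTMLWithEvaluator` are invented for this example and are not from Copilot's answers.

```delphi
program EvaluatorSketch;

{$APPTYPE CONSOLE}

uses
  System.RegularExpressions;

type
  // Hypothetical helper class; it exists only to host the evaluator method.
  TLinkFormatter = class
  public
    function FormatLink(const Match: TMatch): string;
  end;

function TLinkFormatter.FormatLink(const Match: TMatch): string;
begin
  // Group 1 is the href value, group 2 is the text between <a ...> and </a>.
  if Match.Groups[1].Value = Match.Groups[2].Value then
    Result := Match.Groups[1].Value
  else
    Result := Match.Groups[1].Value + ' (' + Match.Groups[2].Value + ')';
end;

function ProcessHTMLWithEvaluator(const Input: string): string;
var
  Formatter: TLinkFormatter;
  Evaluator: TMatchEvaluator;
begin
  Formatter := TLinkFormatter.Create;
  try
    // Assigning to a typed variable first keeps the overload choice unambiguous.
    Evaluator := Formatter.FormatLink;
    Result := TRegEx.Replace(Input,
      '<a\s+[^>]*href="([^"]+)"[^>]*>(.*?)</a>', Evaluator);
    // Remove whatever tags are left.
    Result := TRegEx.Replace(Result, '<[^>]+>', '');
  finally
    Formatter.Free;
  end;
end;

begin
  WriteLn(ProcessHTMLWithEvaluator(
    'Check out <a href="http://example.com">http://example.com</a> and ' +
    '<a href="http://another.com">this link</a>.'));
end.
```

This should give the same result as the loop-based version that Copilot ended up with. The loop is arguably easier to follow, while the evaluator avoids searching the whole string again for every link.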

Feedspot 100 Best Genealogy Blogs
